As the CEO of Bytewax, I'm thrilled to announce the release of our latest module: bytewax-redis.

Modules are pre-built connectors and operators that extend Bytewax: connectors speed up development, and advanced operators enable richer processing. For more details, you can visit the modules page on our website.

This integration combines the AI-native stream processing capabilities of Bytewax with the accessibility and flexibility of Redis, a great mix for feature pipelines and live inference.

Why Redis?

Redis has long been known for its speed, simplicity, and versatility as an in-memory data store. Its support for data structures like strings, hashes, lists, sets, and streams makes it a strong candidate for a feature store: a centralized repository for serving up-to-date feature values to machine learning models in real time.

By leveraging Redis as a feature store, organizations can:

- Accelerate Model Inference: Serve features to models with minimal latency.

- Simplify Architecture: Use a single system for both data storage and message streaming.

- Enhance Scalability: Easily scale Redis to handle increasing workloads.

bytewax-redis: The Perfect Pairing

Our new bytewax-redis module allows you to seamlessly integrate Redis streams and key-value stores into your Bytewax dataflows. Whether you're consuming data from Redis streams, writing processed data back to them, or interacting with Redis' key-value storage, bytewax-redis has you covered.

If you are looking for the code shown in this post, you can find it in a single file in the examples directory in the GitHub repository.

Key Features

- Redis Stream Source: Consume data from Redis streams as part of your dataflow.

- Redis Stream Sink: Output processed data to Redis streams.

- Redis Key-Value Sink: Write data directly to Redis key-value stores.

Getting Started: A Quick Example

Let's dive into a practical example that showcases how to use bytewax-redis in your Bytewax dataflows. We'll walk through three separate dataflows:

- Stream Producer: Writes data to a Redis stream.

- Stream Consumer: Reads data from the Redis stream.

- Key-Value Producer: Writes key-value pairs to Redis.

Setting Up

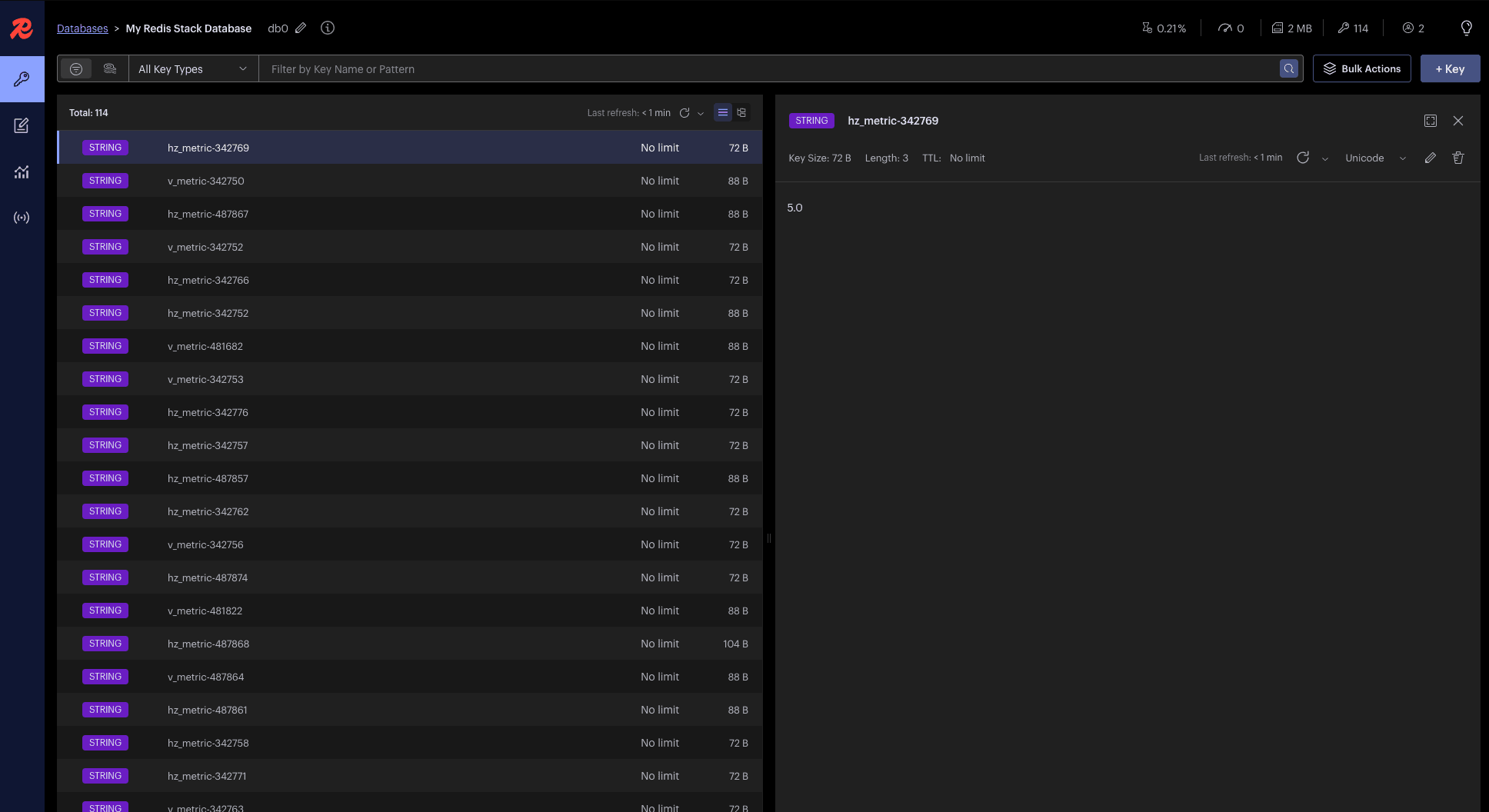

First, ensure you have Redis running locally, or update the REDIS_HOST and REDIS_PORT variables to point to your Redis instance. I like to use Redis Stack, which comes with a UI for debugging.

docker run -d --name redis-stack -p 6379:6379 -p 8001:8001 redis/redis-stack:latest
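Before wiring up a dataflow, it can help to confirm that something is actually answering on the Redis port. Here is a minimal sketch that uses only the Python standard library. The helper name `redis_is_reachable` is hypothetical (it is not part of bytewax-redis), and the check assumes an instance without authentication or TLS.

```python
import socket


def redis_is_reachable(host: str = "localhost", port: int = 6379, timeout: float = 1.0) -> bool:
    """Return True if a server at host:port answers an inline PING with +PONG."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            conn.settimeout(timeout)
            # Redis accepts inline commands terminated by CRLF.
            conn.sendall(b"PING\r\n")
            reply = conn.recv(64)
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False
    return reply.startswith(b"+PONG")
```

Calling `redis_is_reachable()` with no arguments checks the local container started above. A `False` result usually means the container is not running or the port mapping differs.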

Next, you can install the module with pip.

pip install bytewax-redis

Now, in a file named redis_connector.py, read the connection settings from environment variables. The defaults point to the local instance you just started in Docker; override them to target a remote Redis instance.

import os

REDIS_HOST = os.environ.get("REDIS_HOST", "localhost")
REDIS_PORT = int(os.environ.get("REDIS_PORT", 6379))
REDIS_DB = int(os.environ.get("REDIS_DB", 0))
REDIS_STREAM_NAME = os.environ.get("REDIS_STREAM_NAME", "example-stream")
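If you prefer to fail fast on a bad setting, the same variables can be collected into one validated object. This is only a sketch: `RedisSettings` and `from_env` are hypothetical names introduced for illustration, and the range checks are my own assumptions about what counts as a sensible value.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class RedisSettings:
    host: str = "localhost"
    port: int = 6379
    db: int = 0
    stream_name: str = "example-stream"

    @classmethod
    def from_env(cls, env: Optional[Mapping[str, str]] = None) -> "RedisSettings":
        """Build settings from a mapping (os.environ by default), validating numbers."""
        env = os.environ if env is None else env
        port = int(env.get("REDIS_PORT", "6379"))
        if not 0 < port < 65536:
            raise ValueError(f"REDIS_PORT out of range: {port}")
        db = int(env.get("REDIS_DB", "0"))
        if db < 0:
            raise ValueError(f"REDIS_DB must be non-negative: {db}")
        return cls(
            host=env.get("REDIS_HOST", "localhost"),
            port=port,
            db=db,
            stream_name=env.get("REDIS_STREAM_NAME", "example-stream"),
        )
```

Passing a plain dict instead of `os.environ` makes the parsing easy to check without touching the real environment.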

Stream Producer Dataflow

This dataflow writes a series of dictionaries to a Redis stream.

from bytewax import operators as op
from bytewax.bytewax_redis import RedisStreamSink
from bytewax.dataflow import Dataflow
from bytewax.testing import TestingSource

stream_producer_flow = Dataflow("redis-stream-producer")

stream_data = [
    {"field-1": 1},
    {"field-1": 2},
    {"field-1": 3},
    {"field-1": 4},
    {"field-2": 1},
    {"field-2": 2},
]

stream_inp = op.input("test-input", stream_producer_flow, TestingSource(stream_data))
op.inspect("redis-stream-writing", stream_inp)
op.output(
    "redis-stream-out",
    stream_inp,
    RedisStreamSink(REDIS_STREAM_NAME, REDIS_HOST, REDIS_PORT, REDIS_DB),
)

Run the Stream Producer:

python -m bytewax.run redis_connector.py:stream_producer_flow
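The test data above is already flat, but real events often carry nested values, and a Redis stream entry is a flat map of fields to simple values. One way to handle that is to flatten each event before it reaches the sink. The sketch below is an illustration under assumptions: `to_stream_fields` is a hypothetical helper, and JSON-encoding nested values and dropping `None` are choices I made for the example, not something bytewax-redis does for you.

```python
import json
from typing import Any, Dict, Union

FieldValue = Union[str, int, float, bytes]


def to_stream_fields(event: Dict[str, Any]) -> Dict[str, FieldValue]:
    """Flatten one event into a flat field-to-value map suitable for a stream entry."""
    fields: Dict[str, FieldValue] = {}
    for name, value in event.items():
        if value is None:
            # There is no null field value, so skip missing data.
            continue
        if isinstance(value, bool):
            # bool is a subclass of int; store it explicitly as 0 or 1.
            fields[name] = int(value)
        elif isinstance(value, (str, int, float, bytes)):
            fields[name] = value
        else:
            # Lists, dicts, and other nested values become JSON strings.
            fields[name] = json.dumps(value, sort_keys=True)
    return fields
```

In a dataflow, a helper like this could sit in front of the sink with something like `op.map("to-fields", stream_inp, to_stream_fields)`.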

Stream Consumer Dataflow

We can read the data back from the Redis stream with a second dataflow defined in the same file, redis_connector.py. This dataflow simply reads the entries from the stream and prints them.

from bytewax.bytewax_redis import RedisStreamSource

consumer_flow = Dataflow("redis-consumer")

consumer_inp = op.input(
    "from_redis",
    consumer_flow,
    RedisStreamSource([REDIS_STREAM_NAME], REDIS_HOST, REDIS_PORT, REDIS_DB),
)
op.inspect("received", consumer_inp)

Run the Stream Consumer:

To run the consumer, we point Bytewax at the consumer_flow entry point in the same file.

python -m bytewax.run redis_connector.py:consumer_flow
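Redis clients commonly hand back raw bytes unless they are told to decode responses, so the entries you see in the `received` step may have bytes keys and values. That shape is an assumption on my part; inspect your own output to confirm it. If it holds, a small decoding step makes the data easier to work with downstream. `decode_entry` is a hypothetical helper, not part of bytewax-redis.

```python
from typing import Dict, Mapping, Union

Decoded = Union[str, int, float]


def _parse_number(text: str) -> Decoded:
    """Return text as int or float when it parses as one, else unchanged."""
    for cast in (int, float):
        try:
            return cast(text)
        except ValueError:
            continue
    return text


def decode_entry(entry: Mapping[bytes, bytes]) -> Dict[str, Decoded]:
    """Decode bytes keys and values to str, converting numeric strings to numbers."""
    decoded: Dict[str, Decoded] = {}
    for raw_key, raw_value in entry.items():
        key = raw_key.decode("utf-8") if isinstance(raw_key, bytes) else str(raw_key)
        text = raw_value.decode("utf-8") if isinstance(raw_value, bytes) else str(raw_value)
        decoded[key] = _parse_number(text)
    return decoded
```

For example, `decode_entry({b"field-1": b"1"})` returns `{"field-1": 1}`.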

Key-Value Producer Dataflow

Finally, we can define a third dataflow in the same file that uses the key-value sink. This dataflow simply writes key-value pairs to Redis.

from bytewax.bytewax_redis import RedisKVSink

kv_producer_flow = Dataflow("redis-kv-producer")

kv_data = [
    ("key-1", 1),
    ("key-1", 2),
    ("key-2", 1),
    ("key-1", 3),
    ("key-2", 5),
]

kv_inp = op.input("test-input", kv_producer_flow, TestingSource(kv_data))
op.inspect("redis-kv-writing", kv_inp)
op.output("redis-kv-out", kv_inp, RedisKVSink(REDIS_HOST, REDIS_PORT, REDIS_DB))

Run the Key-Value Producer:

python -m bytewax.run redis_connector.py:kv_producer_flow
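Note that the test data repeats keys. Assuming the sink overwrites the existing value for a key, as a plain Redis SET does, and that items are written in order by a single worker, only the last value per key survives. The sketch below simulates that behavior with an ordinary dict so you can see what to expect in Redis afterwards; `final_values` is a hypothetical helper used only for illustration.

```python
from typing import Any, Dict, Iterable, Tuple


def final_values(pairs: Iterable[Tuple[str, Any]]) -> Dict[str, Any]:
    """Replay key-value writes in order; later writes replace earlier ones."""
    store: Dict[str, Any] = {}
    for key, value in pairs:
        store[key] = value  # same effect as an overwrite: the old value is replaced
    return store


kv_data = [("key-1", 1), ("key-1", 2), ("key-2", 1), ("key-1", 3), ("key-2", 5)]
print(final_values(kv_data))  # {'key-1': 3, 'key-2': 5}
```

This last-write-wins behavior is what makes a key-value store convenient for serving the latest feature value per entity.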

Real-Time AI and Machine Learning with Redis and Bytewax

By combining Bytewax's robust data processing with Redis's speed and versatility, you can build systems that respond to data in real time, a critical requirement for modern AI and machine learning applications.

Use Cases

- Feature Engineering Pipelines: Compute and update features in real time as new data arrives.

- Online Learning Systems: Train models incrementally using streaming data.

- Real-Time Analytics: Perform live analytics and update dashboards instantly.
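To make the feature engineering case concrete, here is a minimal sketch of an incrementally updated feature: a running mean per key. The function takes the previous state and a new value and returns the new state plus the value to emit. `update_running_mean` is a hypothetical name, and the `(state, value) -> (new_state, output)` shape is my assumption about what a stateful mapping step expects.

```python
from typing import Optional, Tuple

State = Tuple[int, float]  # (count of values seen, current mean)


def update_running_mean(state: Optional[State], value: float) -> Tuple[State, float]:
    """Fold one new value into the running mean without storing past values."""
    count, mean = state if state is not None else (0, 0.0)
    count += 1
    # Incremental mean update: move the mean toward the new value by 1/count.
    mean += (value - mean) / count
    return (count, mean), mean


# Replay a few values for a single key to see the feature evolve.
state: Optional[State] = None
means = []
for reading in [1, 2, 3, 4]:
    state, current = update_running_mean(state, reading)
    means.append(current)
print(means)  # [1.0, 1.5, 2.0, 2.5]
```

In Bytewax, this is the kind of function you would hand to a stateful step such as `op.stateful_map` on a keyed stream, with the emitted `(key, mean)` pairs going to the key-value sink. Check the operator reference for the exact signature in your version.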

Conclusion

The release of bytewax-redis marks a significant step toward simplifying the development of real-time AI and machine learning applications. We're excited to see what you'll build with this powerful integration.

Get Started Today

To start using bytewax-redis, install it via pip:

pip install bytewax-redis

Check out our GitHub repository for more examples and documentation.

More on Redis ❤️ Bytewax

Blog: Redis-driven Dataflow for Clickstream Aggregation

Video: Bytewax + Redis | Real-time streaming for AI

🐝 Thank you for being a part of the Bytewax community. We're committed to making real-time data processing accessible and efficient for developers everywhere. If you like this content, subscribe to us on substack or give us a ⭐️ on GitHub!
